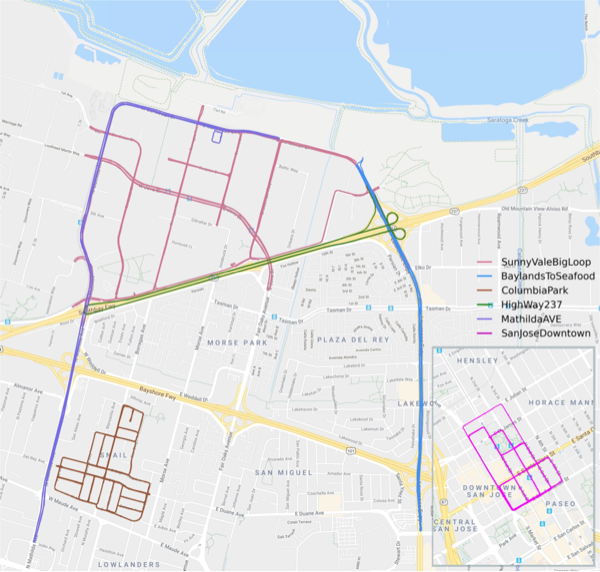

Bridge

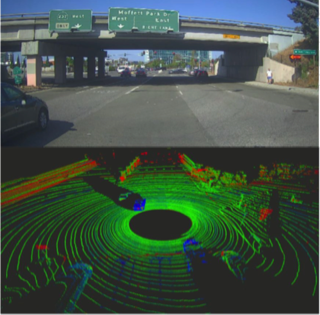

Overpass

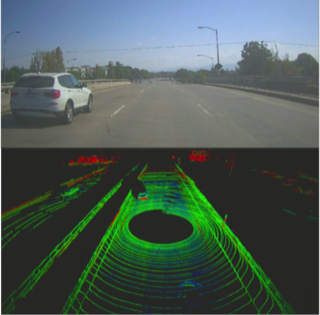

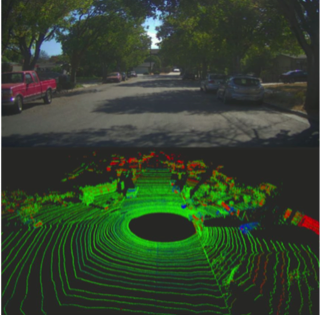

Tree-lined road

Intersection

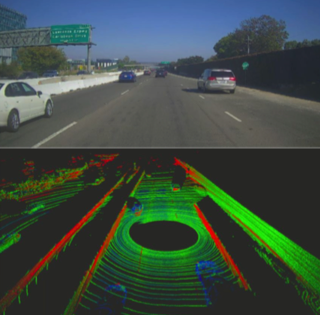

Highway

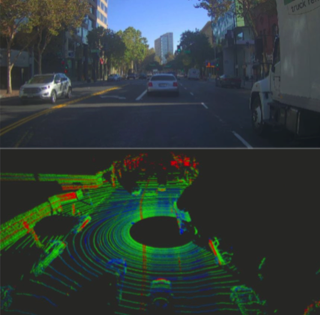

Downtown

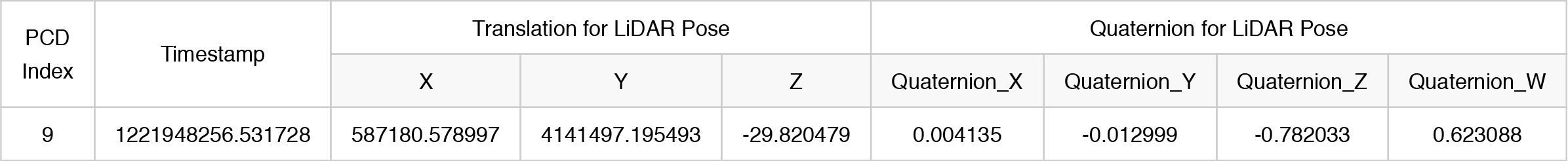

**@brief load poses file.

* @param <pose_file_path> path of pose file.

* @param <indexs> index of PCD files.

* @param <timestamps> timestamp of PCD files.

* @param <poses> the pose of PCD files, including translation and quaternion.

*/

bool load_pcd_poses(

const std::string& pose_file_path,

std::vector<int>& indexs,

std::vector<std::string>& timestamps,

std::vector<Eigen::Affine3d>& poses) {

std::ifstream inf(pose_file_path.c_str());

if (!inf) {

return false;

}

int index = 0;

std::string timestamp;

double tran_x = 0.0;

double tran_y = 0.0;

double tran_z = 0.0;

double quat_x = 0.0;

double quat_y = 0.0;

double quat_z = 0.0;

double quat_r = 0.0;

while(inf >> index >> timestamp >> tran_x >> tran_y

>> tran_z >> quat_x >> quat_y >> quat_z >> quat_r) {

indexs.push_back(index);

timestamps.push_back(timestamp);

Eigen::Quaterniond quat(quat_r, quat_x, quat_y, quat_z);

Eigen::Translation3d trans(tran_x, tran_y, tran_z);

Eigen::Affine3d pose = trans * quat;

poses.push_back(pose);

}

inf.close();

if (poses.size() > 0) {

return true;

} else {

return false;

}

}

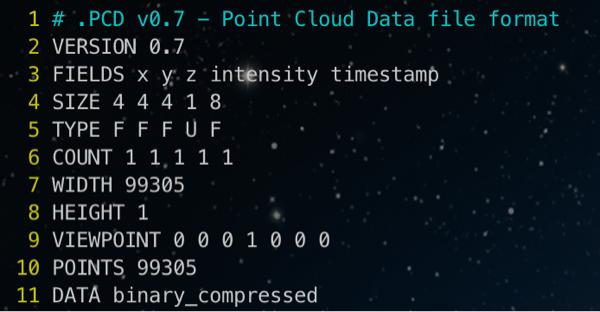

/**@brief register the format header of PCD file of dataset into PCL. */

struct PointXYZIT {

float x;

float y;

float z;

unsigned char intensity;

double timestamp;

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

} EIGEN_ALIGN16;

POINT_CLOUD_REGISTER_POINT_STRUCT (PointXYZIT,

(float, x, x)

(float, y, y)

(float, z, z)

(uint8_t, intensity, intensity)

(double, timestamp, timestamp)

)

/**@brief load pcd file.

* @param <pose_file_path> path of pcd file.

* @param <cloud> a PointXYZIT type pointer to store the content of PCD file.

*/

bool load_pcd_file(

const std::string& load_pcd_path,

pcl::PointCloud<PointXYZIT>::Ptr cloud) {

if (pcl::io::loadPCDFile(load_pcd_path.c_str(), *cloud) != 0) {

std::cerr << "Can't load pcd file from " << load_pcd_path << std::endl;

return false;

}

return true;

}

/**@brief transform a source cloud according to the given pose into the global coordinate system. * @param <pose> The pose of PCD file.

* @param <src_cloud> The source point cloud will be transformed.

* @param <dst_cloud> The transformed result of source point cloud.

*/

void transform_pointcloud(

const Eigen::Affine3d& pose, pcl::PointCloud<PointXYZIT>::Ptr src_cloud, pcl::PointCloud<PointXYZIT>::Ptr dst_cloud) {

pcl::transformPointCloud(*src_cloud, *dst_cloud, pose); }

@inproceedings{L3NET_2019_CVPR,

title={L3-net: Towards learning based lidar localization for autonomous driving},

author={Lu, Weixin and Zhou, Yao and Wan, Guowei and Hou, Shenhua and Song, Shiyu},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

pages={6389--6398},

year={2019}

}